|

|

Xi'an Jiaotong University, China

|

|

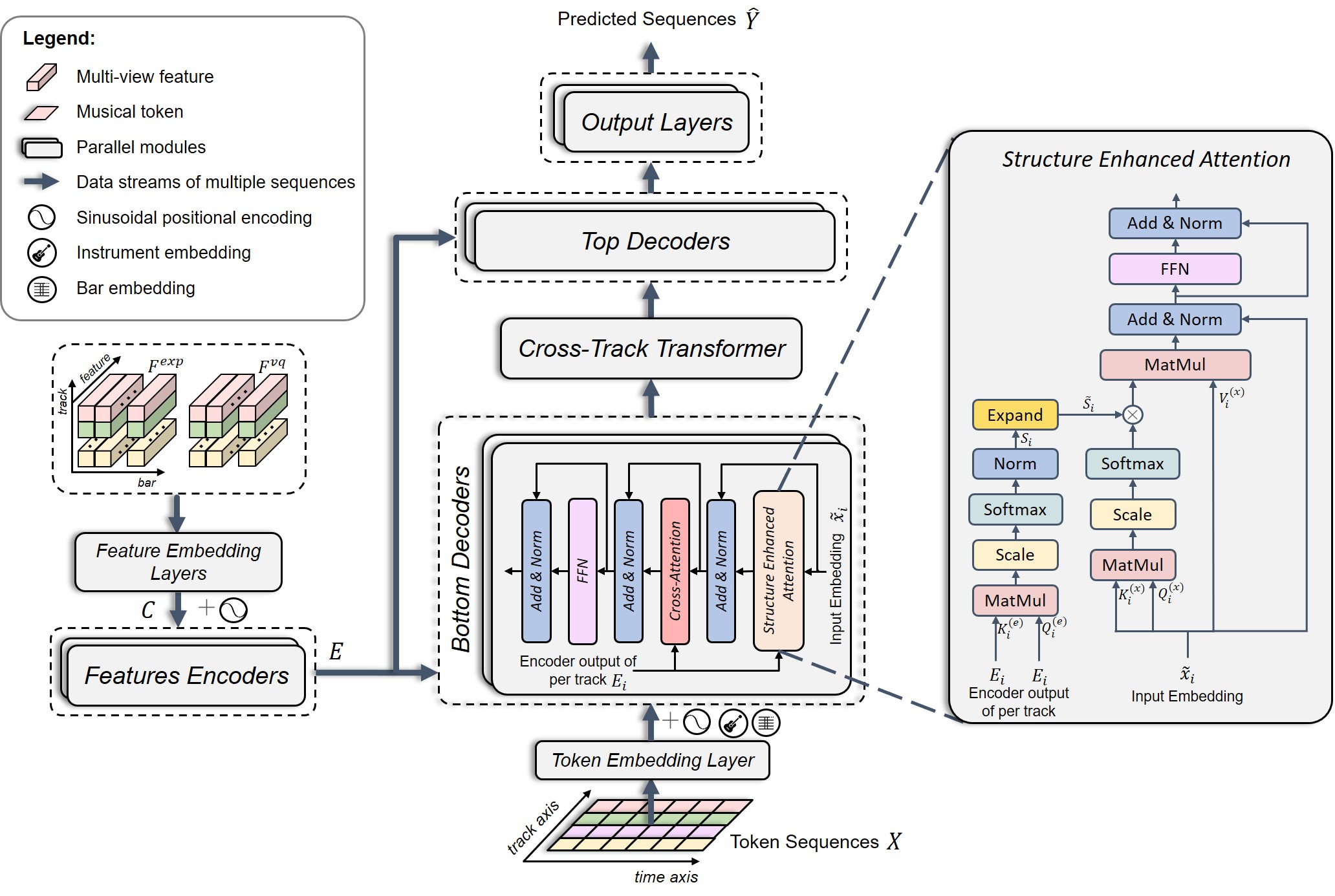

Conditional music generation offers significant advantages in user convenience and control, and holds great potential for AI-generated content research. However, building conditional generative systems for multitrack popular songs presents three primary challenges: insufficient fidelity of input conditions, poor structural modeling, and inadequate inter-track harmony learning in generative models. To address these issues, we propose BandCondiNet, a conditional model based on parallel Transformers, designed to process multiple music sequences and generate high-quality multitrack samples. Specifically, we propose multi-view features across time and instruments as high-fidelity conditions. Moreover, we propose two specialized modules for BandCondiNet: Structure Enhanced Attention (SEA) to strengthen the musical structure, and Cross-Track Transformer (CTT) to enhance inter-track harmony. We conducted both objective and subjective evaluations on two popular music datasets with different sequence lengths. Objective results on the shorter dataset show that BandCondiNet outperforms other conditional models in 9 out of 10 metrics related to fidelity and inference speed, with the exception of Chord Accuracy. On the longer dataset, BandCondiNet surpasses all conditional models across all 10 metrics. Subjective evaluations across four criteria reveal that BandCondiNet trained on the shorter dataset performs best in Richness and performs comparably to state-of-the-art models in the other three criteria, while significantly outperforming them across all criteria when trained on the longer dataset. To further expand the application scope of BandCondiNet, future work should focus on developing an advanced conditional model capable of adapting to more user-friendly input conditions and supporting flexible instrumentation. |

|

We conduct experiments on a popular music subset of the Lakh MIDI Dataset (LMD), one of the largest publicly available symbolic music datasets containing multiple instruments. |

|

The source code is available here. |

|

Please consider citing the following article if you find our work useful: |

|

|

|

Note: In the following MIDI players, the Melody track and the Drum track are visualized in magenta and grey, respectively. |

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|

|

|

|

Reference

|

|

FIGARO

|

|

BandCondiNet

|