Xi'an Jiaotong University, China

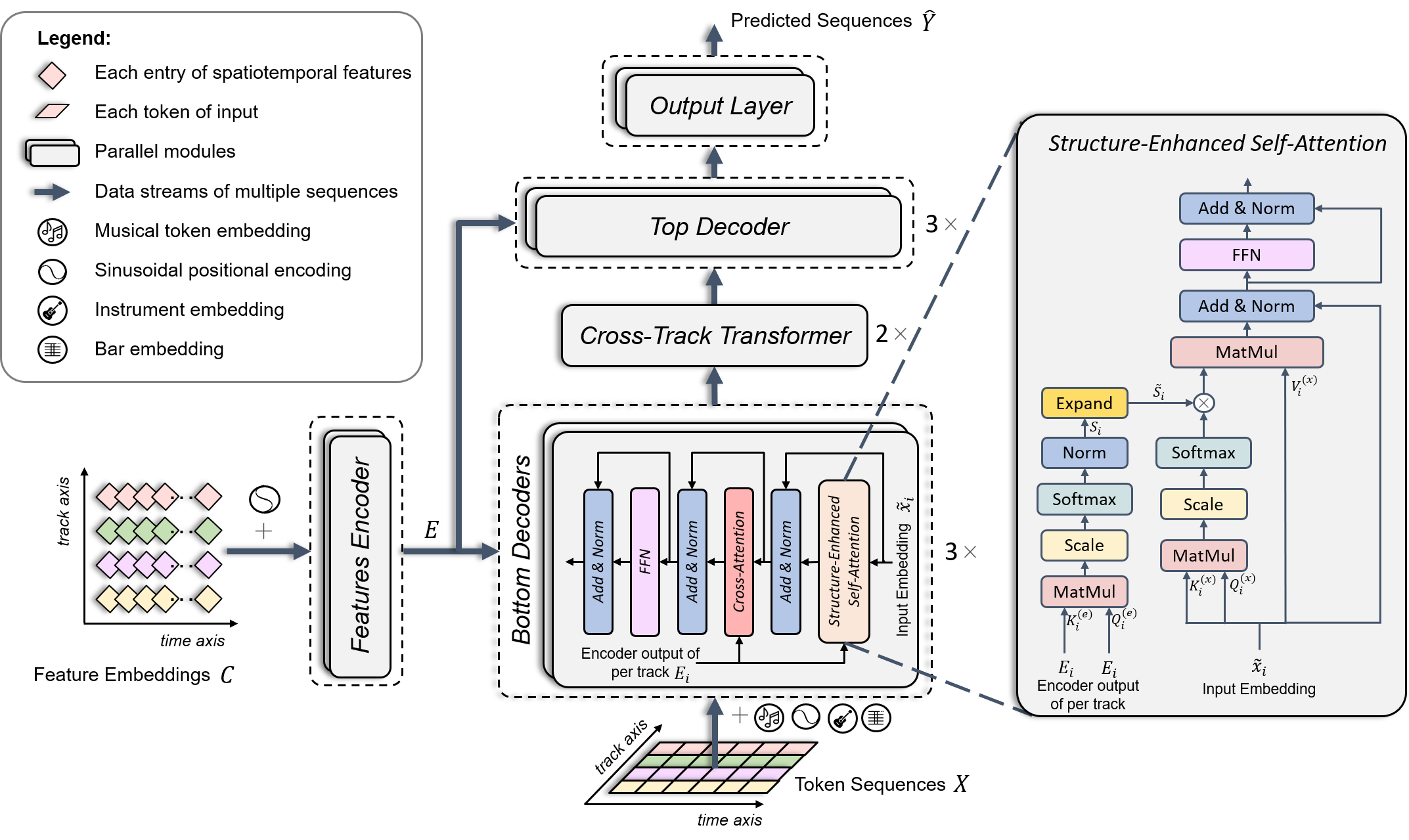

Controllable music generation promotes interaction between humans and composition systems by projecting users' intent onto the music they want. Introducing controllability has become an increasingly important issue in symbolic music generation. Building controllable generative systems for multi-instrument popular music typically raises two main challenges: weak controllability and poor music quality. To address these issues, we first propose spatiotemporal features as powerful, fine-grained controls that enhance the controllability of the generative model. In addition, we design an efficient music representation called REMI_Track, which converts multitrack music into multiple parallel music sequences and shortens the sequence length of each track with Byte Pair Encoding (BPE). We then present BandControlNet, a conditional model based on parallel Transformers, which processes the multiple music sequences and generates high-quality music samples conditioned on the given spatiotemporal control features. More concretely, two specially designed modules of BandControlNet, structure-enhanced self-attention (SE-SA) and the Cross-Track Transformer (CTT), strengthen the modeling of musical structure and inter-track harmony, respectively. Experimental results on two popular music datasets with samples of different lengths show that BandControlNet outperforms other conditional music generation models on most objective metrics of fidelity and inference speed, and remains robust when generating long music samples. Subjective evaluations show that BandControlNet trained on the dataset with shorter samples generates music of quality comparable to state-of-the-art models, and significantly outperforms them when trained on the dataset with longer samples.
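
REMI_Track turns a multitrack piece into parallel per-track sequences and shortens each one with BPE. The sketch below illustrates only that general idea and is not the authors' implementation: the token names (`Bar`, `Pos_*`, `Pitch_*`, `Dur_*`), the `+` joiner, and the helpers `count_pairs`, `merge_pair`, `learn_bpe`, and `decode` are hypothetical, and learning one shared set of merges across all tracks is an assumption. It is plain greedy BPE: repeatedly merge the most frequent adjacent token pair, counting pairs only within each track so that no merged token spans two instruments.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical illustration of BPE over parallel per-track token sequences.
# Token vocabulary and shared-merge strategy are assumptions, not REMI_Track's spec.
Pair = Tuple[str, str]


def count_pairs(sequences: List[List[str]]) -> Counter:
    """Count adjacent token pairs; each track is counted separately, so pairs never cross tracks."""
    counts: Counter = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    return counts


def merge_pair(seq: List[str], pair: Pair) -> List[str]:
    """Replace non-overlapping occurrences of `pair` (scanning left to right) with one merged token."""
    merged, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            merged.append(seq[i] + "+" + seq[i + 1])
            i += 2
        else:
            merged.append(seq[i])
            i += 1
    return merged


def learn_bpe(tracks: Dict[str, List[str]], num_merges: int) -> Tuple[List[Pair], Dict[str, List[str]]]:
    """Learn merges jointly over all tracks and return the merges plus the shortened tracks."""
    seqs = {name: list(seq) for name, seq in tracks.items()}
    merges: List[Pair] = []
    for _ in range(num_merges):
        counts = count_pairs(list(seqs.values()))
        if not counts:
            break
        pair, freq = counts.most_common(1)[0]  # ties go to the pair encountered first
        if freq < 2:  # stop once no pair repeats
            break
        merges.append(pair)
        seqs = {name: merge_pair(seq, pair) for name, seq in seqs.items()}
    return merges, seqs


def decode(seq: List[str]) -> List[str]:
    """Undo the merges by splitting on the '+' joiner (assumes base tokens contain no '+')."""
    return [base for tok in seq for base in tok.split("+")]


# Hypothetical REMI-style tokens for a two-bar, two-track excerpt.
tracks = {
    "Melody": ["Bar", "Pos_0", "Pitch_60", "Dur_4", "Pos_4", "Pitch_62", "Dur_4",
               "Bar", "Pos_0", "Pitch_60", "Dur_4"],
    "Bass": ["Bar", "Pos_0", "Pitch_36", "Dur_8", "Bar", "Pos_0", "Pitch_36", "Dur_8"],
}

merges, shortened = learn_bpe(tracks, num_merges=3)
for name in tracks:
    print(name, len(tracks[name]), "->", len(shortened[name]))
```

With three merges, the melody shrinks from 11 to 5 tokens and the bass from 8 to 6, and `decode` recovers both original sequences exactly. Because each track stays a separate sequence, the per-track lengths shrink independently; how BandControlNet then relates the tracks (via its Cross-Track Transformer) is beyond this sketch.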

We conduct experiments on a popular music subset of the Lakh MIDI Dataset (LMD), the largest publicly available symbolic music dataset that contains multiple instruments.

The source code is available here.

Note: In the following MIDI players, the melody track and the drum track are visualized in magenta and grey, respectively.

Generated samples (6 rows; columns: Reference, FIGARO, BandControlNet)